Import your small and big data. Organize according to our templates, or define your own schema. Our format inference algorithm synthesizes parsing functions and lets you override if needed, without coding. Use our interactive import screens or CLI for bulk imports.
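
This page does not describe how Solvuu's format inference works, so the following is only a minimal sketch of the general idea. It assumes a delimited text file with a header row. The sketch guesses the delimiter from a sample, picks the narrowest type for each column, and returns a parsing function whose column types can be overridden. The names `synthesize_parser` and `overrides` are hypothetical and are not part of any Solvuu interface.

```python
import csv
import io
from typing import Callable, Dict, List, Optional

def _infer_delimiter(lines: List[str], candidates: str = ",\t;|") -> str:
    """Return the first candidate that appears the same nonzero number of times on every line."""
    for d in candidates:
        counts = {line.count(d) for line in lines}
        if len(counts) == 1 and counts.pop() > 0:
            return d
    raise ValueError("could not infer a delimiter")

def _infer_type(values: List[str]) -> type:
    """Pick the narrowest type (int, then float, else str) that parses every value."""
    for candidate in (int, float):
        try:
            for v in values:
                candidate(v)
            return candidate
        except ValueError:
            continue
    return str

def synthesize_parser(sample: str, overrides: Optional[Dict[str, type]] = None) -> Callable[[str], List[dict]]:
    """Infer a format from `sample` and return a function that parses text in that format.

    `overrides` (hypothetical) replaces the inferred type for any named column.
    """
    lines = [line for line in sample.splitlines() if line]
    delimiter = _infer_delimiter(lines)
    rows = list(csv.reader(lines, delimiter=delimiter))
    header, body = rows[0], rows[1:]
    types = {name: _infer_type([r[i] for r in body]) for i, name in enumerate(header)}
    types.update(overrides or {})

    def parse(text: str) -> List[dict]:
        # Convert every field with the (possibly overridden) column type.
        reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
        return [{k: types[k](v) for k, v in rec.items()} for rec in reader]

    return parse
```

For a tab-separated sample with columns `gene`, `length`, and `score`, the returned parser yields `length` as `int` and `score` as `float`. Passing `overrides={"length": float}` changes only that one column and leaves the other inferred types alone.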

Your data is not just bytes. Solvuu automatically computes relevant summary statistics and generates rich interactive visualizations. Explore and gain insights into your data immediately; slice and dice as needed.

Collaboration is essential to the scientific process. Share your data easily and securely with the rest of your team. Solvuu gives you clear insight into who has access to what and lets you easily manage your sharing and privacy settings.

Import files of any size. We optimize storage, infer detailed format specifications, and validate complex, customizable properties.

Bring your tabular data to life. Collaboratively edit, enforce rigorous constraints, and co-locate metadata with your big data and workflows.

Rich interactive plots. Default views are based on application and data types, and are fully customizable when needed. Export publication-quality images.

Privacy is always the default. Optionally share with your team, or the world. Clear indications of who has access to what.

Data is transferred securely and encrypted at rest. We follow industry-standard security protocols with continuous monitoring.

No hardware. Install nothing; use everything. Dedicated hosting available.

Command line interface for power users and bulk operations.

Push data from your instruments, create custom GUIs, and build your own software in days instead of months.

A complete record of every event. Truly reproducible workflows for publications and regulatory requirements.

Express genomics workflows as easily as formulas in a spreadsheet. Filter, map, reduce and compute statistics exactly as you need.
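
As a rough illustration of the operations named above, here is plain Python. This is not Solvuu's workflow syntax, which this page does not show. The code filters hypothetical variant records by quality, maps them to chromosome names, and reduces them to per-chromosome counts. It also computes a mean-quality statistic over the same filtered set. The `Variant` record, its fields, and the `min_qual` threshold are assumptions made for the example.

```python
from dataclasses import dataclass
from functools import reduce
from statistics import mean
from typing import Dict, List

# Hypothetical record type; field names are illustrative only.
@dataclass(frozen=True)
class Variant:
    chrom: str
    pos: int
    qual: float

def variants_per_chrom(variants: List[Variant], min_qual: float) -> Dict[str, int]:
    kept = filter(lambda v: v.qual >= min_qual, variants)  # filter step
    chroms = map(lambda v: v.chrom, kept)                   # map step

    def tally(counts: Dict[str, int], chrom: str) -> Dict[str, int]:
        # reduce step: fold each chromosome name into a running count
        counts[chrom] = counts.get(chrom, 0) + 1
        return counts

    return reduce(tally, chroms, {})

def mean_quality(variants: List[Variant], min_qual: float) -> float:
    """Summary statistic over the same filtered set of variants."""
    return mean(v.qual for v in variants if v.qual >= min_qual)

if __name__ == "__main__":
    calls = [
        Variant("chr1", 100, 50.0),
        Variant("chr1", 200, 10.0),
        Variant("chr2", 300, 40.0),
        Variant("chr1", 400, 35.0),
    ]
    print(variants_per_chrom(calls, 30.0))  # {'chr1': 2, 'chr2': 1}
```

In practice `collections.Counter` would do the counting in one line. `reduce` is used here only to mirror the filter/map/reduce wording.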

All your favorite open source algorithms are ready to use. Proprietary tools are available with appropriate licensing, and you can add your own tools.

An intelligent execution engine automatically parallelizes jobs and minimizes I/O. Fully scalable.

Every change to data and workflows is tracked. Roll back whenever needed. Use any version of all data and all tools.

We integrate with major repositories like GEO, SRA, COSMIC and more. Seamlessly refer to public data sets in your private analyses.

Your data is always yours. Easily export in industry-standard formats, and submit directly to public repositories.

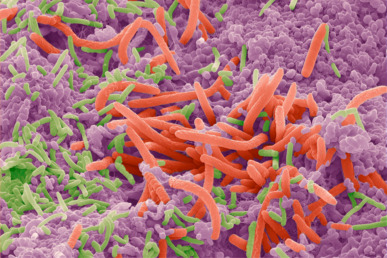

Microbiome: Translate your microbiome research into practical applications. Bring novel, safe and effective products to market faster.

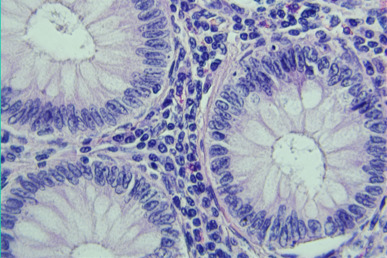

Cancer: Integrate the right set of data science and collaboration tools, and achieve rapid advances in cancer therapeutics.

Agriculture: Accelerate research, enable innovation and create value by adopting effective digital technology solutions to improve crop productivity.
